Nick Bostrom is a Swedish philosopher, a professor at the University of Oxford, co-founder of the World Transhumanist Association, and director of the Future of Humanity Institute, established at Oxford in 2005. He tries to understand the problems humanity faces at the prospect of the emergence of superintelligence. What will happen when machines surpass humans in intelligence? Will they help us, or will they destroy humanity? Can we ignore the problem of artificial intelligence development and still feel safe? Nick Bostrom explains complicated scientific questions about the future of humanity in accessible language.

With the permission of the publishing house “Mann, Ivanov and Ferber”, Lenta.ru publishes an excerpt from Nick Bostrom's book “Artificial Intelligence”.

A superintelligence could have enormous power to shape the future according to its goals. But what would those goals be? What would it strive for? Would a superintelligence's motivations depend on its level of intelligence?

We will put forward two theses. The orthogonality thesis states that (with some caveats) any level of intelligence can be combined with any final goal, because intelligence and final goals are orthogonal, that is, independent, variables. The instrumental convergence thesis states that superintelligent agents, whatever the broad variety of their final goals, will nevertheless pursue similar intermediate goals, because they will all have the same instrumental reasons for doing so. Taken together, these theses will help us understand more clearly what a superintelligent agent might intend.

The link between intelligence and motivation

Earlier in the book we warned against the error of anthropomorphism: we should not project human qualities onto the capabilities of a superintelligent agent. We repeat that warning here, only replacing the word “capabilities” with the word “motivations”.

Before developing the first thesis, let us take a brief preliminary look at the vast space of possible minds. In this abstract, almost cosmic space, human minds form a negligibly small cluster.

Take two members of the human race who, by general consensus, have diametrically opposed personalities: say, Hannah Arendt and Benny Hill. We would probably rate the difference between them as close to the maximum. But we do so only because our perceptions are governed entirely by our experience, which in turn rests on existing human stereotypes (to some extent we are also influenced by fictional characters, themselves created by human imagination to appeal to that same human imagination).

If, however, we change the scale and look at the distribution of intelligence across the infinite space of possible minds, we are forced to admit that these two people are little more than virtual clones. At least as far as the characteristics of the nervous system are concerned, Hannah Arendt and Benny Hill are practically identical.

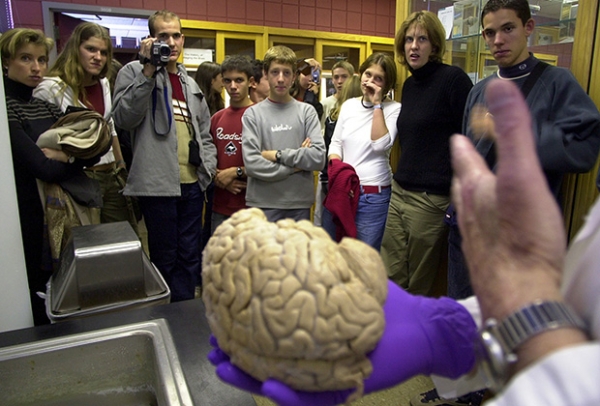

Suppose both brains were placed side by side in the quiet of a museum: looking at the exhibit, we would simply say that they belong to one and the same species. Moreover, which of us could tell which brain was Hannah Arendt's and which was Benny Hill's? If we studied the morphology of both brains, we would finally be convinced of their fundamental similarity: the same laminar architecture of the cortex, the same brain areas, the same structure of nerve cells, and neurotransmitters of the same chemical nature.

Photo: David Duprey / AP

Although the human mind is comparable to an almost indiscernible point floating in the infinite space of possible minds, there is a tendency to project human properties onto all kinds of alien entities and artificial intelligent systems. Eliezer Yudkowsky comments superbly on this motif in the paper cited earlier, “Artificial Intelligence as a Positive and Negative Factor in Global Risk”:

“In the heyday of pulp science fiction, magazine covers were full of pictures in which yet another alien monster, popularly known as a ‘bug-eyed monster’, was carrying off yet another beauty, invariably in a torn dress, and the beauty was always one of ours, an Earth woman.

It seems that all the artists believed that non-humanoid aliens with a completely different evolutionary history must necessarily feel sexual attraction to human women. <…> Most likely, the artists who drew all this never even wondered whether a giant beetle would be sensitive to the charms of our women. After all, in their view, any half-naked woman is sexually attractive by definition; desiring her was taken to be an integral feature of the male members of the human race.

All of the artists' attention was on the torn dress; the last thing they cared about was how the mind of a giant insectoid works. And that was the artists' main mistake. If the dress had not been torn, they thought, the women would have seemed less tempting to the bug-eyed monsters. It is a pity the aliens themselves never took this into account.”

An artificial intelligence may be even less humanlike in its motivations than a green scaly alien from space. Aliens (and this is no more than a guess) would be biological creatures that arose through an evolutionary process, so we might expect them to have motivations typical, to some extent, of evolved beings.

It would therefore not be surprising if an intelligent alien's motives turned out to be driven by fairly simple interests: food, air, temperature, avoiding bodily injury or harm, illness, predators, sex, and raising offspring. If the aliens belonged to some intelligent society, they might develop motives related to cooperation and competition. Like us, they might show loyalty to their community, resent free riders and, who knows, even have a certain vanity, worrying about their reputation and appearance.

Nick Bostrom

Photo: nickbostrom.com

Thinking machines, unlike aliens, have no inherent reason to care about such things. There would be nothing paradoxical about an AI whose sole purpose is, say, to count the grains of sand on the beaches of Boracay, to calculate the decimal expansion of π, or to maximize the number of paperclips in its future light cone.

In fact, it is much easier to create an AI with a simple, well-defined goal than to instill our value system in it by endowing the machine with human qualities and motives. Judge for yourself which is harder: writing a program that measures how many digits of π have been calculated and stored in memory, or creating an algorithm that reliably measures how far some goal of real importance to humanity has been achieved, say, a world of universal prosperity and universal justice?
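To make the “easy” side of that comparison concrete, here is a minimal Python sketch of a goal measure that simply counts how many correct leading digits of π are stored in memory. It is an illustration only, not anything from the book: the names `pi_digits` and `digits_of_pi_goal` are hypothetical, and the digit generator is Gibbons' unbounded spigot algorithm, a standard technique chosen here for brevity.

```python
from itertools import islice


def pi_digits():
    """Yield the decimal digits of pi (3, 1, 4, 1, 5, ...) with Gibbons' unbounded spigot."""
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # Digit n is now certain: emit it and shift the state one decimal place.
            yield n
            q, r, n = 10 * q, 10 * (r - n * t), (10 * (3 * q + r)) // t - 10 * n
        else:
            # Not enough precision yet: absorb one more term of the series.
            q, r, t, k, n, l = (
                q * k,
                (2 * q + r) * l,
                t * l,
                k + 1,
                (q * (7 * k + 2) + r * l) // (t * l),
                l + 2,
            )


def digits_of_pi_goal(memory):
    """Hypothetical 'final goal' score: number of leading stored digits that match pi."""
    score = 0
    for stored, true_digit in zip(memory, pi_digits()):
        if stored != true_digit:
            break
        score += 1
    return score


memory = list(islice(pi_digits(), 10))
print(memory)                            # [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]
print(digits_of_pi_goal(memory))         # 10
print(digits_of_pi_goal([3, 1, 4, 2]))   # 3
```

The whole goal fits in a dozen lines; nothing comparably short could score progress toward universal prosperity and justice, which is the asymmetry the paragraph above points to.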

Sad as it may be, it is easier to write code that specifies simple goal-directed behavior, devoid of any deeper content, and to train the machine to carry out that task. This is most likely the path a programmer will choose for an embryonic AI if he is focused on “getting the AI to work” as quickly as possible (such a programmer is evidently not concerned with what exactly the AI will do, beyond giving an impressive demonstration of intelligent behavior). We will return to this important topic shortly.

Intelligent search for optimal plans and strategies is possible in the service of any goal. Intelligence and motivation are, in a sense, orthogonal. Picture them as two coordinate axes defining a plane on which each point represents a logically possible intelligent agent. This picture, however, needs a few clarifications.
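The two-axis picture can be illustrated with a toy planner in Python, offered as a hypothetical sketch rather than anything from the book. The search depth stands in for intelligence and the scoring function stands in for the final goal; the planner code never looks at what the goal is, so any depth can be paired with any goal. The integer “world”, its actions, and the names `run` and `plan` are all invented for illustration.

```python
from itertools import product
from typing import Callable, Sequence

# Hypothetical toy world: the state is an integer and each action transforms it.
ACTIONS: dict[str, Callable[[int], int]] = {
    "inc": lambda s: s + 1,
    "dec": lambda s: s - 1,
    "double": lambda s: s * 2,
}


def run(start: int, actions: Sequence[str]) -> int:
    """Apply a sequence of actions to the start state and return the end state."""
    state = start
    for name in actions:
        state = ACTIONS[name](state)
    return state


def plan(start: int, goal: Callable[[int], float], depth: int) -> tuple[str, ...]:
    """Exhaustively search all action sequences of length `depth` and return one
    whose end state `goal` scores highest.

    `depth` plays the role of intelligence (how far ahead the agent looks) and
    `goal` plays the role of final motivation; the search never inspects the goal.
    """
    return max(product(ACTIONS, repeat=depth), key=lambda seq: goal(run(start, seq)))


# Each (depth, goal) pair is one point on the intelligence/motivation plane.
goals = {
    "largest number": lambda s: s,
    "closest to 7": lambda s: -abs(s - 7),
    "smallest number": lambda s: -s,
}
for depth in (3, 4):
    for name, goal in goals.items():
        best = plan(1, goal, depth)
        print(depth, name, best, run(1, best))
```

Starting from 1, the target 7 is out of reach within three steps, while a depth of 4 finds a plan ending exactly at 7 (for example 1, 2, 4, 8, 7): more “intelligence” serves the same goal better without changing what the goal is.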

For example, a system with very little intelligence could hardly have very complex motivations. For it to be correct to say that an agent “has” a given set of motivations, those motivations must be functionally integrated with its decision-making process, which places certain demands on memory, processing power, and possibly intelligence.

An intelligence capable of self-modification will probably be subject to certain dynamical constraints. Put simply: if a thinking machine that has learned to modify itself suddenly develops a burning desire to become stupid, it will soon cease to be intelligent. These observations do not, however, undermine the main thesis of the orthogonality of intelligence and motivation, which we now present for your consideration.

The orthogonality thesis

Intelligence and final goals are orthogonal: more or less any level of intelligence can in principle be combined with more or less any final goal.

This thesis may seem controversial because of its apparent similarity to certain positions in classical philosophy that are still widely debated. But read in a narrower sense, the orthogonality thesis is quite plausible.

Note that the orthogonality thesis speaks not of rationality or reasonableness but only of intelligence. By intelligence we mean skill at prediction, planning, and means-ends reasoning in general. This instrumental cognitive efficiency is the feature that matters most when we try to understand the possible consequences of the emergence of artificial superintelligence. Even if one uses the word “rational” in a sense that denies rationality to a superintelligent agent that maximizes the number of paperclips, this in no way rules out that agent having outstanding powers of instrumental reasoning, powers that could have an enormous impact on our world.

According to the orthogonality thesis, artificial agents can have goals deeply alien to human interests and values. This does not mean, however, that it is impossible to predict the behavior of particular artificial agents, even hypothetical superintelligent agents whose cognitive complexity and performance characteristics might make them in some respects “opaque” to human analysis. There are at least three ways to approach the problem of predicting the motivations of a superintelligence.

Photo: Richard Brian / Reuters

1. Predictability through design. If we can assume that programmers are able to design the goal system of a superintelligent agent so that it stably pursues the goal its creators set, then we can make at least one prediction: the agent will pursue that goal. And the more intelligent the agent, the more intellectual ingenuity it will bring to that pursuit. So even before the agent is created, we could predict something about its behavior if we knew something about its creators and the goals they intend to install.

2. Predictability through inheritance. If a digital intelligence is modeled directly on a human mind (as would be possible with a full emulation of the human brain), it may inherit the motivations of its human template. Such an agent might retain some of them even after its cognitive abilities have developed to the point of superintelligence. Caution is needed in such cases, however. The agent's goals could easily be distorted while the template's data are uploaded, or during their subsequent processing and enhancement; how likely this is depends on how the emulation procedure is organized.

3. Predictability through convergent instrumental reasons. Even without knowing the agent's final goals in detail, we can draw some conclusions about its more immediate objectives by analyzing the instrumental reasons that would arise for a wide range of possible final goals in a wide range of situations. This method of prediction becomes more useful the greater the agent's cognitive ability, because a more intelligent agent is more likely to recognize the true instrumental reasons for its actions and to act so as to achieve its goals in any likely situation. (To be precise, there may be instrumental reasons, unknown to us now, that an agent would discover only after reaching a very high level of intelligence; this makes the behavior of a superintelligent agent less predictable.)
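The third method can be illustrated with a deliberately tiny Python model, a hypothetical sketch that is not from the book. Each step, an agent either “works” (producing units of whatever its final goal values) or “acquires” resources that make later work more productive. The three final goals below have nothing in common except that more output is better for each of them, yet exhaustive search shows that every optimal plan begins the same way. The tripling rule, the horizon, and the names `total_output` and `best_first_moves` are arbitrary assumptions.

```python
import math
from itertools import product
from typing import Callable, Sequence


def total_output(plan: Sequence[str]) -> int:
    """Units produced by a plan in the toy model (hypothetical dynamics)."""
    resources, output = 1, 0
    for action in plan:
        if action == "acquire":
            resources *= 3          # assumption: acquiring triples available resources
        else:
            output += resources     # a "work" step produces units in proportion to resources
    return output


# Three unrelated final goals, each a different increasing function of output.
GOALS: dict[str, Callable[[int], float]] = {
    "paperclips": lambda units: float(units),
    "digits of pi": lambda units: math.sqrt(units),
    "grains of sand counted": lambda units: math.log1p(units),
}


def best_first_moves(utility: Callable[[int], float], horizon: int) -> set[str]:
    """First actions of every plan that maximizes `utility` over `horizon` steps."""
    plans = list(product(("work", "acquire"), repeat=horizon))
    best = max(utility(total_output(p)) for p in plans)
    return {p[0] for p in plans if utility(total_output(p)) == best}


for name, utility in GOALS.items():
    print(f"{name}: {best_first_moves(utility, horizon=4)}")   # {'acquire'} for every goal
```

Knowing only that the agent's goal rewards more output, we can predict its first move without knowing which goal it has. The prediction does depend on the situation, though: with a horizon of 1 step, the best first move for every goal is “work”, which echoes the point that instrumental reasons are analyzed “in a variety of situations”.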